February 7, 2026

When Automation Gets Complicated, Simplify: My Pivot from n8n to Python

I've always loved automation. Not just the productivity gains, but the feeling of watching a system run itself. So when I discovered n8n, a visual no-code automation tool, I was hooked.

The promise was compelling: build complex workflows without writing code. Drag, drop, connect. I started small with a script to fetch pull requests, another to post comments. Then I got ambitious.

This started as a developer problem. It became a leadership lesson about knowing when to pivot.

What if I could automate our entire code review process? Multi-stage reviews with quality gates. Jira integration to fetch requirements. Git history analysis for context. Automatic fix PRs for common issues.

I built it. It worked. And then, slowly, it started to fight me.

When Visual Becomes Viscous

Here's what the system looked like at its peak:

Stage 0: Initial questions workflow

- Fetch open PRs from GitHub

- Extract Jira ticket from branch name

- Fetch ticket details and acceptance criteria

- Analyze git history for modified files

- Generate contextual questions

- Post to PR as comment

Stage 1: Architecture review (quality gate)

- Run Claude analysis on code changes

- Score against quality criteria (DRY, separation of concerns, patterns)

- If score ≥ 7.0, proceed to Stage 2

- If score < 7.0, stop and provide improvement guidance

Stage 2: Parallel detailed reviews

- Documentation review with mentoring notes

- Testing review with coverage analysis

- Learning opportunities identification

- Generate automated fix PRs where possible

It was sophisticated. Maybe too sophisticated.

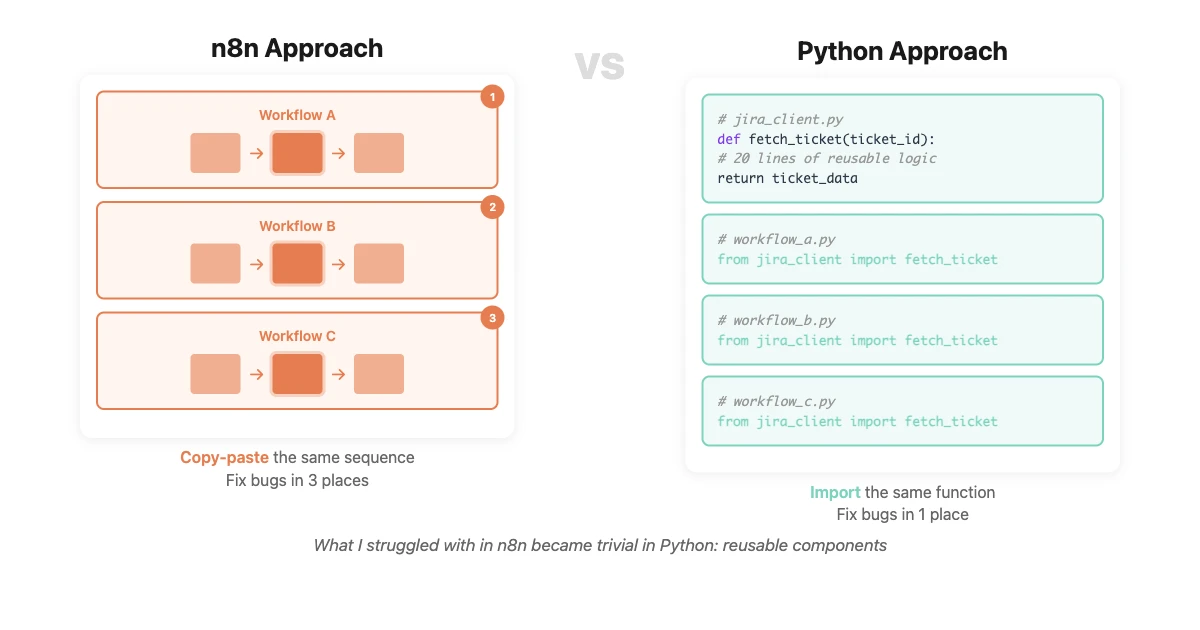

The problems started small. I needed to reuse a "fetch Jira ticket" sequence in multiple workflows. In code, that's a function. In n8n, it meant copying six nodes between workflows. When I fixed a bug in one place, I had to remember to fix it in three others.

I tried the proper solution. n8n has a concept called "subworkflows" for exactly this: reusable modules you can call from other workflows. I spent hours trying to get them working. They either didn't work at all, or worked inconsistently. I couldn't figure out why.

Maybe I was doing it wrong. Maybe I needed to study the documentation more deeply. But that was the problem: I was fighting the tool instead of solving the problem.

The irony of no-code tools: they promise to lower the barrier to automation. But visual workflows can create their own form of complexity, one that's harder to manage than code.

The Lesson Isn't "Code > No-Code"

I want to be clear: this isn't an anti-no-code story. n8n is a powerful tool. I still use it for simpler automations, things that genuinely benefit from visual representation.

For rapid prototyping, one-off integrations, or non-technical teams, I'd probably still reach for n8n first. The visual model is powerful when it fits the problem.

The lesson is subtler: the right tool is the one that gets out of your way.

Tool friction is one of the most commonly cited sources of developer frustration. When developers spend more time fighting their tools than solving problems, productivity tanks. More importantly, motivation suffers.

No-code tools shine when:

- The problem is naturally visual (data flows, state machines)

- Non-technical stakeholders need to understand or modify the system

- The workflow is relatively simple (under 10 steps)

- Integration between existing services is the main value

Code makes more sense when:

- You need complex logic or conditional flows

- Reusability and maintainability matter

- You're building something that will evolve significantly

- The team building it already writes code

For me, the n8n system crossed a threshold where visual representation became a liability instead of an asset. Your threshold might be different.

Questions to ask about your tools:

- Am I fighting the tool or using it?

- Is the abstraction helping or hiding?

- Can I debug when things break?

- Am I spending more time on the tool than the problem?

- Would this be simpler in a different medium?

The Turning Point

I sat with that frustration for a week. Was I just not learning the tool properly? Was I missing something obvious? n8n has an active community, and surely other people were building complex systems with it.

Maybe they were. But the moment I knew I'd crossed a line wasn't when something broke. It was when I caught myself dreading opening n8n, when fixing a simple bug felt harder than reimagining the entire system.

I realized I was fighting two things:

- The abstraction was hiding, not helping. Visual workflows are great for understanding flow. But when you need to see why something happens, you're clicking through property panels instead of reading code.

- I couldn't leverage my existing skills. Twenty years of programming muscle memory (refactoring, debugging, testing) all became irrelevant. I was learning a new way to think instead of applying what I already knew.

Eventually, I made a call: I'd rebuild it in Python. Familiar territory. Maybe boring, but sometimes boring is exactly what you need.

Back to Code (In One Day)

Here's what surprised me: the Python rebuild took one day.

Not three weeks. Not even three days. One day, working with Claude Code.

I described what the system needed to do. Claude scaffolded the structure: file selection logic, LLM analysis wrapper, Jira client, state management. I reviewed, adjusted, tested. By evening, I had a working system.

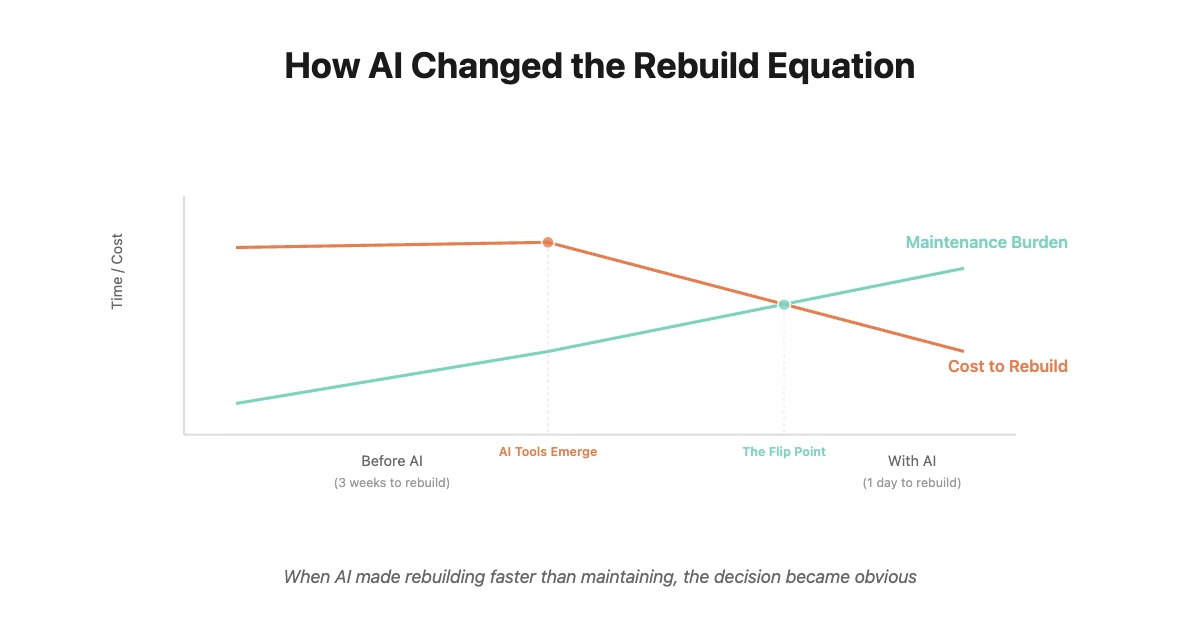

This is the part that still amazes me: AI assistants didn't just make coding faster. They changed the economics of "should I rebuild this?"

Five years ago, the decision would have been obvious: stick with n8n. Rebuilding would take weeks. The sunk cost would be too high. Today, with AI pair programming, rebuilding took less time than I'd spent debugging subworkflows.

The cost equation flipped.

Here's what I built: the same PR review system, but in Python.

Stage 0: PR Monitoring & Context Gathering

- Fetch open PRs with the GitHub CLI

- Extract Jira tickets from branch names (reusable function!)

- Pull ticket details and acceptance criteria via Jira API

- Analyze git history for modified files

- Generate contextual questions

- Post to PR as comments
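As a rough illustration of the first step in this stage (not the actual code from the system), fetching open PRs through the GitHub CLI could look like the sketch below. The `PullRequest` class, the function names, and the choice of JSON fields are assumptions made for the example; the only external piece relied on is `gh pr list` with its `--json` output.

```python
import json
import subprocess
from dataclasses import dataclass


@dataclass(frozen=True)
class PullRequest:
    """Hypothetical container for the fields the later stages need."""
    number: int
    title: str
    branch: str


def parse_pr_list(raw_json: str) -> list[PullRequest]:
    """Turn the JSON printed by `gh pr list --json ...` into PullRequest objects."""
    return [
        PullRequest(
            number=item["number"],
            title=item["title"],
            branch=item["headRefName"],
        )
        for item in json.loads(raw_json)
    ]


def fetch_open_prs(repo: str, run=subprocess.run) -> list[PullRequest]:
    """List open PRs for `repo` ("owner/name") by shelling out to the GitHub CLI.

    `run` is injectable so the function can be tested without calling `gh`.
    """
    result = run(
        [
            "gh", "pr", "list",
            "--repo", repo,
            "--state", "open",
            "--json", "number,title,headRefName",
        ],
        capture_output=True,
        text=True,
        check=True,  # raise if gh exits with a non-zero status
    )
    return parse_pr_list(result.stdout)
```

Keeping the parsing separate from the subprocess call means the parsing can be unit tested with a canned JSON string, which is exactly the kind of thing that is awkward to do with a chain of visual nodes.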

Stage 1: Architecture Review

- Run Claude Code CLI analysis on PR changes

- Score against quality criteria (DRY, separation of concerns, patterns)

- Quality gate at 7.0 threshold

- Provide improvement guidance if below threshold
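The gate itself is simple enough to sketch. The version below assumes the overall score is an unweighted mean of per-criterion scores on a 0 to 10 scale; the post only states the 7.0 threshold, so the averaging, the `GateResult` class, and the guidance wording are all illustrative assumptions.

```python
from dataclasses import dataclass

QUALITY_GATE_THRESHOLD = 7.0  # threshold stated in the post


@dataclass(frozen=True)
class GateResult:
    """Hypothetical result object for the Stage 1 gate."""
    score: float
    passed: bool
    guidance: list[str]


def overall_score(criteria_scores: dict[str, float]) -> float:
    """Combine per-criterion scores. Assumption: a plain unweighted mean."""
    if not criteria_scores:
        raise ValueError("at least one criterion score is required")
    return sum(criteria_scores.values()) / len(criteria_scores)


def apply_quality_gate(
    criteria_scores: dict[str, float],
    threshold: float = QUALITY_GATE_THRESHOLD,
) -> GateResult:
    """Pass when the overall score meets the threshold, else list weak criteria."""
    score = overall_score(criteria_scores)
    passed = score >= threshold
    guidance = [] if passed else [
        f"Improve {name} (scored {value:.1f})"
        # Weakest criteria first, so the most useful advice leads.
        for name, value in sorted(criteria_scores.items(), key=lambda kv: kv[1])
        if value < threshold
    ]
    return GateResult(score=score, passed=passed, guidance=guidance)
```

A PR scoring `{"dry": 5.0, "patterns": 8.0}` would average 6.5, fail the gate, and get guidance only for the criterion that fell below the threshold.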

Stage 2: Detailed Reviews

- Documentation review with mentoring

- Testing coverage analysis

- Learning opportunities identification

- Generate fix PRs where appropriate

The same workflow. The same stages. The same logic.

But now it's functions instead of visual nodes. The "fetch Jira ticket" logic I'd struggled to reuse in n8n? It's a 20-line function I can import anywhere.
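To make that concrete, here is a minimal sketch of the branch-name half of that logic. It assumes branches embed a key in Jira's default shape (an uppercase project key, a hyphen, an issue number), as in `feature/PROJ-123-add-login`; the function name and the pattern are illustrative, not taken from the actual system.

```python
import re
from typing import Optional

# Assumption: keys look like "PROJ-123" and are not glued to preceding
# letters or digits (so "myPROJ-1" is not treated as a ticket key).
JIRA_KEY_PATTERN = re.compile(r"(?<![A-Za-z0-9])([A-Z][A-Z0-9]+-\d+)")


def extract_jira_key(branch_name: str) -> Optional[str]:
    """Return the first Jira-style key found in a branch name, or None."""
    match = JIRA_KEY_PATTERN.search(branch_name)
    return match.group(1) if match else None
```

Any module that needs it can `import` it, and a bug fixed here is fixed everywhere it is used.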

While I was at it, I also built a separate code quality analysis system: proactive issue detection that runs on a schedule, creates Jira tickets automatically, with per-repository limits and deduplication. That took another day.
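One way the deduplication and per-repository limits could fit together is sketched below. Everything here is an assumption for illustration: the `Issue` fields, fingerprinting by repository, file, and rule, and the default limit of three are not details given in the post.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    """Hypothetical detected issue that might become a Jira ticket."""
    repo: str
    file_path: str
    rule: str
    summary: str


def fingerprint(issue: Issue) -> str:
    """Stable identity for deduplication; the summary text is deliberately ignored."""
    key = "\x1f".join((issue.repo, issue.file_path, issue.rule))
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def select_issues_to_file(
    issues: list[Issue],
    already_filed: set[str],
    max_per_repo: int = 3,
) -> list[Issue]:
    """Drop duplicates and cap how many new tickets each repository gets per run."""
    seen = set(already_filed)  # copy so the caller's set is not mutated
    per_repo: dict[str, int] = {}
    selected: list[Issue] = []
    for issue in issues:
        fp = fingerprint(issue)
        if fp in seen:
            continue  # already ticketed, or repeated within this run
        if per_repo.get(issue.repo, 0) >= max_per_repo:
            continue  # repository has hit its limit for this run
        seen.add(fp)
        per_repo[issue.repo] = per_repo.get(issue.repo, 0) + 1
        selected.append(issue)
    return selected
```

The fingerprints of tickets that were actually created would need to be persisted between scheduled runs, for example in a small state file, so that `already_filed` reflects earlier runs.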

But the core point: rebuilding the PR review system took one day, and the result is production-ready. Here's what really changed:

What code gave me back:

- Reusability: Functions instead of copied node sequences

- Debuggability: Stack traces instead of clicking through nodes

- Version control: Meaningful git diffs instead of JSON exports

- Testing: Unit tests for each component

- Collaboration: Other developers can read and contribute

The Python version is actually more sophisticated than the n8n version. But it feels simpler because the complexity is manageable.

The Leadership Angle

Here's where this becomes about more than just tools: knowing when to simplify is a leadership skill.

I invested weeks building the n8n system. Admitting it wasn't working meant admitting that time was sunk. The temptation was to push through: "I just need to learn this better," "I'm almost there," "It would be wasteful to start over."

Sunk cost thinking keeps us locked into suboptimal paths.

The harder question: How do you know when to push through complexity versus when to pivot?

I don't have a formula. But here's what helped me:

- Notice the emotional tenor. Was I energized when working on the system, or drained? Energy signals alignment. Dread signals friction.

- Track time-to-value ratio. How much time was I spending maintaining the system versus building new value? When maintenance exceeds creation, something's wrong.

- Ask: Would I choose this tool again? If I were starting fresh today, knowing what I know now, would I pick the same approach? If not, that's information.

The pivot cost me one day. I got back hours every week in reduced maintenance burden. The ROI was immediate.

The AI Assistant Factor

Here's something I'm still processing: AI coding assistants have fundamentally changed the "rebuild vs. refactor" calculation.

The old wisdom was: never rewrite from scratch. Refactor incrementally. Rewrites are expensive and risky. That wisdom made sense when rewrites took months and usually failed.

But when a rebuild takes one day? The calculation changes.

I'm not suggesting we rewrite everything at the first sign of friction. But the threshold for "is it worth rebuilding?" has shifted dramatically. The cost of trying is low enough that experimentation becomes viable.

This connects to something I wrote about earlier: when the cost of experimentation drops below the cost of coordination, our workflow patterns change. The same principle applies to tooling choices.

What I'd Do Differently

Honestly? I'm glad I built the n8n version first.

It taught me what the system needed to do. I discovered the edge cases, the error handling, the state management problems. When I rebuilt in Python with Claude's help, I wasn't designing from scratch. I was translating a working system into a better medium.

The n8n version was my prototype. The Python version is my production system. Maybe that's the pattern: use no-code to discover what you need, then rebuild in code when you understand the problem.

If you're considering a similar pivot:

- Start with the smallest viable version in the new tool. Don't rebuild everything at once. Prove the new approach works for one workflow.

- Run both systems in parallel for a week. Compare maintenance burden, debugging ease, and team understanding.

- Measure the friction. Track how long it takes to make a change in each system. If the new approach isn't clearly better, maybe the problem isn't the tool.

The Question I'm Still Sitting With

Automation is supposed to reduce complexity, not create it. But every automation tool introduces its own complexity. The question is whether that complexity is manageable or compounding.

I chose Python because its complexity felt manageable to me. Someone else might find n8n's visual model more intuitive. A third person might prefer Make.com or Zapier or custom bash scripts.

The tool matters less than the match between your problem, your skills, and your tool's affordances.

What I'm learning: the best automation is the one that still makes sense to you six months later when something breaks.

When you open that workflow at 3am because the system is failing, will you understand what you built? Will you remember why you made certain choices? Will you be able to fix it without excavating layers of abstraction?

That's the test.

What tools are you using that feel like they're fighting you? Where have you chosen complexity over simplicity, and why?

I'd be curious to hear what you're seeing in your own automation work. Sometimes the most productive thing we can do is simplify.

Aleksi

Product Owner at Silverbucket, building at the intersection of AI, product craft, and team culture. Based in Tampere, Finland.